Simon Glairy is a highly esteemed expert in the insurance and Insurtech fields, recognized for his specialized focus on risk management and AI-driven risk assessments. With the increasing integration of artificial intelligence (AI) in the insurance sector, Glairy’s insights provide valuable guidance on navigating this complex, evolving landscape.

Can you tell us about the current state of AI adoption among Dutch insurers and some of the common applications of AI in the insurance sector?

AI adoption among Dutch insurers is progressing, with about 15 out of 36 insurers already incorporating AI into their business processes. Common applications include analyzing unstructured data for risk assessment, developing personalized product recommendations, implementing fraud detection mechanisms, and automating claims processing. Primarily, insurers use AI to improve operational efficiency and enhance the customer experience.

What major obstacles are insurers facing in their AI development? How significant are issues related to internal expertise and data infrastructure?

Insurers face several significant hurdles in AI development. Insufficient internal expertise is a prominent challenge, leaving companies struggling to leverage AI effectively. Additionally, foundational issues with data infrastructure and data quality impede progress, as robust AI systems depend heavily on high-quality, well-structured data.

What type of risks do insurers associate with AI implementation? How do non-financial risks compare to financial risks in this context?

Insurers often worry about non-financial risks, such as reputational damage and business continuity issues, which they perceive as more pressing than direct financial risks. There’s a fear that opaque AI-driven decisions might erode customer trust or violate ethical norms. However, De Nederlandsche Bank (DNB), the Dutch central bank and prudential supervisor, also highlights prudential risks, particularly in AI applications such as asset allocation, where unexpected model behavior could lead to significant financial losses.

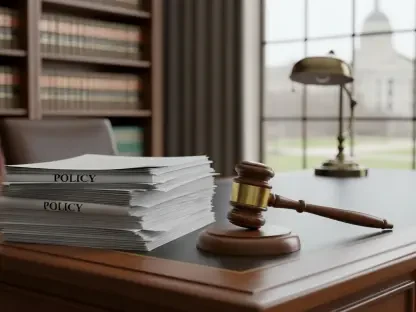

How are existing legal and regulatory requirements impacting AI deployment in insurance? What additional requirements does the European Union’s AI Act impose?

Existing regulations already require AI solutions to adhere to data protection laws, consumer protection statutes, anti-discrimination provisions, and Solvency II governance standards. The European Union’s AI Act adds more specific requirements for high-risk AI systems, demanding rigorous risk assessments and human oversight. Together, these rules are intended to ensure that AI deployments don’t compromise compliance or consumer safety.

Can you explain the SAFEST principles outlined by DNB? Why is ‘soundness’ considered paramount among these principles?

The SAFEST principles—soundness, accountability, fairness, ethics, skills, and transparency—form the foundation of responsible AI use. ‘Soundness’ is paramount because it ensures technological robustness, reliability, and compliance with regulations. DNB emphasizes that unsound AI models could pose systemic risks if widely adopted, hence the need for stringent testing and validation.

What specific measures should insurers take to comply with DNB’s guidance? Why is the creation of a detailed inventory of current AI systems essential?

Insurers should establish clear AI strategies and governance structures, including dedicated AI committees and comprehensive policies. Creating a detailed inventory of AI systems is crucial for identifying which applications need to comply with new regulations, particularly those classified as high-risk under the AI Act. This systematic approach supports early compliance planning and helps insurers avoid regulatory pitfalls.
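To make the inventory idea concrete, here is a minimal sketch of how such a register might be kept and queried. Everything in it is an assumption for illustration: the `AISystem` fields, the system names, the vendor name, and the `high_risk` flag, which is assumed to be set by the insurer's own legal assessment rather than derived automatically. It is not a format prescribed by DNB or the AI Act.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AISystem:
    """One entry in a hypothetical AI system inventory."""
    name: str
    purpose: str
    owner: str                     # accountable business unit
    vendor: Optional[str] = None   # None means the system was built in-house
    high_risk: bool = False        # assumed to come from the insurer's legal review


def needs_ai_act_review(inventory: List[AISystem]) -> List[AISystem]:
    """Return the systems flagged as high-risk, sorted by name."""
    return sorted((s for s in inventory if s.high_risk), key=lambda s: s.name)


def third_party_systems(inventory: List[AISystem]) -> List[AISystem]:
    """Return the systems supplied by an external vendor."""
    return [s for s in inventory if s.vendor is not None]


# Illustrative entries only; names and classifications are made up.
inventory = [
    AISystem("claims-triage", "automated claims processing", "Claims"),
    AISystem("life-pricing", "risk assessment and pricing", "Actuarial", high_risk=True),
    AISystem("fraud-scoring", "fraud detection", "Special Investigations",
             vendor="ExampleVendor"),
]

for system in needs_ai_act_review(inventory):
    print(f"{system.name}: owner={system.owner}, vendor={system.vendor or 'in-house'}")
```

Even a simple register like this lets a compliance team list which systems need closer review and which depend on outside vendors, without waiting for a dedicated tool.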

What are the risks associated with third-party AI systems? How should insurers control these risks effectively?

Third-party AI systems introduce risks related to data quality, performance, and regulatory compliance. Insurers must perform thorough due diligence, incorporate contractual safeguards addressing these areas, and regularly audit third-party systems to ensure they meet strict standards. Effective oversight of vendor-provided AI solutions is critical in maintaining control over these external risks.

How should insurers engage with regulators and industry initiatives as AI oversight evolves? What does DNB’s plan for more in-depth examinations in 2025 imply for insurers?

Insurers need to maintain open communication with regulators and participate actively in industry initiatives to stay ahead of evolving AI oversight. DNB’s plans for in-depth examinations in 2025 indicate increasing scrutiny and highlight the importance of proactive compliance. Insurers must prepare for these evaluations by ensuring their AI systems are robust and well-governed.

Why is maintaining ethical AI practices important for insurers? How can insurers integrate ethical considerations into their AI strategies?

Ethical AI practices are essential in maintaining public trust and ensuring fair treatment of customers. Insurers can integrate ethics by conducting bias audits, using diverse datasets, and reviewing the societal impacts of AI-driven decisions. Establishing ethical AI committees and adhering to industry frameworks like the Dutch Association of Insurers’ Ethical Framework for Data-Driven Applications can guide these efforts.
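As a rough illustration of what a basic bias audit could look like, the sketch below compares approval rates across customer groups and reports the ratio of the lowest rate to the highest. The group labels, the sample decisions, and the 0.8 review threshold are all assumptions chosen for the example; the threshold is a common rule of thumb, not a figure taken from DNB or the AI Act, and a real audit would use more than one metric.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of approved decisions per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest to the highest approval rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so there is no disparity
    return min(rates.values()) / highest


# Made-up sample: (group label, decision approved?)
decisions = (
    [("A", True)] * 3 + [("A", False)] * 1
    + [("B", True)] * 3 + [("B", False)] * 5
)

REVIEW_THRESHOLD = 0.8  # hypothetical trigger for a closer manual review

rates = approval_rates(decisions)
ratio = disparity_ratio(rates)
print(rates, ratio)
if ratio < REVIEW_THRESHOLD:
    print("Approval rates differ notably between groups; flag for review.")
```

A low ratio does not prove unfair treatment on its own, but it gives an ethics committee a concrete signal for deciding which models deserve a closer look.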

What constitutes a clear AI strategy and governance structure for insurers? How can insurers embed the SAFEST principles into their operations effectively?

A clear AI strategy involves defining corporate goals for AI, establishing dedicated committees, and adopting comprehensive policies governing AI use. Embedding the SAFEST principles requires rigorous testing, clear accountability measures, fairness audits, continual ethics reviews, investment in AI training, and transparency with stakeholders and regulators. This disciplined approach helps ensure responsible and compliant AI deployment.

How will strengthening AI oversight affect the insurance sector in the long run? What advantages do insurers gain by aligning proactively with new regulatory guidance?

Strengthening AI oversight should enhance the industry’s resilience and public trust by mitigating risks associated with emerging technologies. By aligning proactively with regulatory guidance, insurers can avoid compliance issues, foster innovation within safe boundaries, and position themselves as leaders in responsible AI use. This strategic alignment can offer a competitive edge, helping them capitalize on AI’s benefits while safeguarding against potential pitfalls.