As artificial intelligence evolves rapidly, insurers face the challenge of identifying and managing the ambiguous risks AI introduces into their coverage. This uncertainty stems from “silent AI”: AI-driven risks that are neither explicitly included nor explicitly excluded in insurance policies. The resulting lack of clarity can create unintended coverage gaps, leading to unforeseen liabilities and financial losses. Because AI is advancing so quickly, insurers must work out how to integrate AI-related risks into their existing policies. They faced a similar problem with “silent cyber,” which involved claims arising from cyber incidents that traditional policies did not clearly address. Silent AI presents a comparable obstacle, and it demands insurers’ immediate attention as AI takes on a more central role in professional and commercial settings.

The comparison to silent cyber underlines the urgency of the issue: as AI develops, insurers will increasingly face AI-related losses under policies that were never written with AI in mind. Such losses could prove costly if not managed effectively. The challenge is compounded by the range of ways AI can cause harm, from software errors to liability disputes to ethical concerns such as bias and discrimination. Insurers must therefore adapt their coverage frameworks quickly to address the pitfalls that accompany AI’s adoption across industries and sectors.

The Complexity of Silent AI

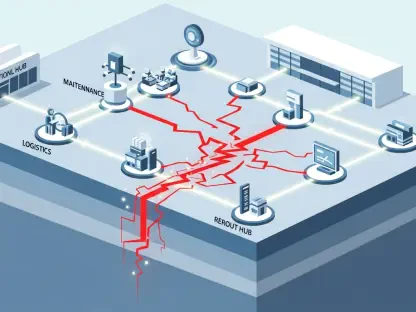

Silent AI introduces several complexities that challenge traditional insurance structures. Because AI can take many forms, it blurs the line between software and professional service, which complicates how liability is assessed under existing policies. For instance, an AI system that processes financial transactions could make undetected errors that produce substantial financial discrepancies. When that happens, it becomes crucial to determine whether the error originated in human programming or in the AI’s own operation. Insurers must examine their policy terms and draw clear boundaries around coverage for AI-related incidents without leaving policyholders exposed.

Insurers must anticipate AI risks, identify potential loopholes in coverage, and issue clearer guidance to policyholders. This requires ongoing collaboration with AI developers and businesses to understand how AI systems work and what their failures can lead to. Only with that understanding can insurers design meaningful coverage against AI liabilities that are not yet visible. Insurers should also prepare for ethical harms caused by AI, such as discriminatory bias or decision-making patterns that affect policyholders’ rights or privileges. These considerations reinforce the need for comprehensive risk assessments that span industry sectors and technology applications.

Addressing Ambiguities through Policy Adaptation

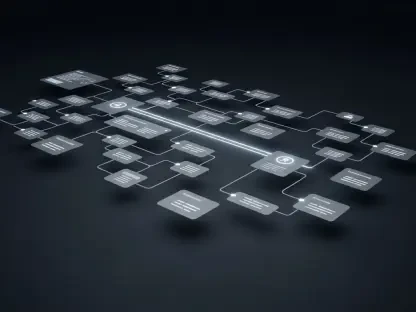

Insurers should actively review and recalibrate their policy frameworks to address AI-related risks explicitly. This means scrutinizing both the exclusions and the gaps in existing policies to determine whether AI-driven errors fall under traditional indemnity clauses or require specific endorsements. AI-related amendments to insurance contracts should cover not only technical failures but also the legal and ethical implications of AI use. Engaging policyholders in dialogue about how they use AI is pivotal, because it helps insurers determine whether an incident stems from human error or from a shortcoming of the AI itself.

To craft robust policies, insurers may need to bolster their underwriting teams with AI risk specialists who can map potential exposure points and recommend appropriate mitigations. Building this expertise internally also gives insurers a better understanding of policyholder needs and prevailing technological trends. Regulation and governance must keep pace as well, with frameworks that reflect a rapidly changing digital landscape. Regulatory initiatives such as the UK’s AI Regulation White Paper highlight the demand for government input and oversight in defining AI responsibilities and liabilities, including within the insurance sector. Such measures will be instrumental in standardizing approaches and making policy interpretations more consistent.

Developing Standards and Future Considerations
