The widespread adoption of generative artificial intelligence has unlocked unprecedented efficiencies for businesses, but it has simultaneously introduced a complex new frontier of risk that is causing cyber insurance underwriters to dramatically intensify their scrutiny. Companies seeking coverage are now finding that their traditional cybersecurity postures are no longer sufficient; they must also provide a transparent and robust account of their AI governance and risk mitigation strategies. This shift marks a pivotal moment where securing adequate cyber insurance is becoming contingent on a company’s ability to demonstrate a sophisticated understanding of AI-specific vulnerabilities, from intellectual property infringement and data integrity issues to novel threats like prompt injection attacks. As underwriters dig deeper into how organizations use AI, manage vendor relationships, and update incident response plans, the pressure is mounting for businesses to formalize their approach to this transformative technology or risk facing higher premiums, reduced coverage, or outright denial.

The Evolving Landscape of Underwriting

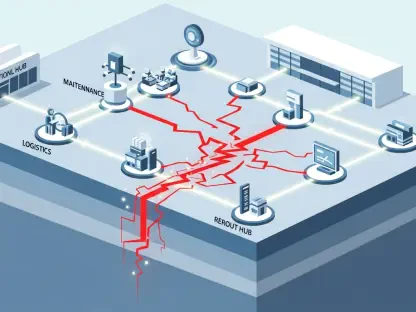

Insurers are fundamentally reshaping their assessment criteria, moving beyond a simple checklist of firewalls and antivirus software to a more nuanced evaluation of how companies integrate and control AI systems. Underwriters are now asking pointed questions about the specific generative AI tools in use, the nature of the data being processed, and the contractual liabilities established with third-party AI vendors. They are particularly interested in seeing evidence of updated incident response policies that explicitly address AI-related scenarios, such as data poisoning or algorithmic bias that leads to reputational or financial harm. This deeper dive is predicated on the understanding that AI introduces unique threat vectors that are not adequately covered by conventional security measures. A company that can provide a comprehensive account of its AI usage, outlining the identified risks and the corresponding controls, is in a much stronger position to negotiate favorable insurance terms. This new underwriting paradigm reflects a market that is still in the early stages of pricing AI risk but is moving quickly to reward proactive governance.

This heightened scrutiny from insurers is not occurring in a vacuum; it is paralleled by a rapidly developing legal and regulatory framework that is turning AI risk management into a matter of compliance. Lawmakers are no longer on the sidelines: states including California, Colorado, Utah, and Texas have already passed significant AI-related legislation. This growing body of law addresses critical concerns such as data privacy, algorithmic discrimination, and transparency requirements. The courts are also grappling with the fallout from AI deployment, as evidenced by over 200 active cases involving AI-driven data bias and intellectual property disputes. For businesses, this means the pressure to manage AI risk is coming from multiple directions. Failure to implement a strong governance framework not only jeopardizes insurance coverage but also exposes the organization to significant legal and regulatory penalties, making a comprehensive AI risk strategy an essential component of modern corporate stewardship.

Proactive Strategies for Securing Coverage

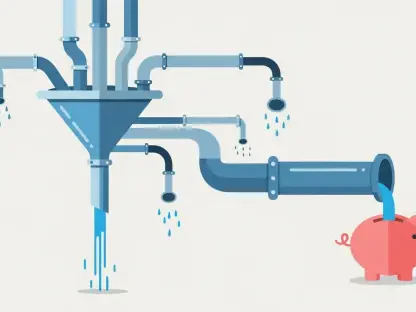

To successfully navigate this challenging new environment, organizations must adopt a proactive and strategic approach to risk mitigation that goes beyond mere compliance. The first step involves a thorough internal assessment to define the company’s specific risk tolerance for AI-related incidents. This critical exercise helps determine which potential losses the business is willing to self-insure and which risks are significant enough to transfer to an insurance provider. Once this tolerance is established, companies can employ sophisticated methods to determine the appropriate level of coverage. One key practice is benchmarking, which involves comparing insurance limits against those of industry peers of a similar size and risk profile. Another, more tailored approach is “limit modeling.” This process requires the organization to identify plausible AI-related loss scenarios—such as a major data breach caused by a compromised AI model or a significant business interruption from a failed AI system—and then scale the potential financial impact to its specific operations, allowing for an informed, data-driven decision on how much insurance to purchase.
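The benchmarking and limit-modeling steps described above can be illustrated with a small calculation. The Python sketch below is only an illustration under stated assumptions: the class and function names, the scaling factors, and every dollar figure are hypothetical, and sizing the limit to the worst modeled scenario above the retained amount is one simple convention, not a method prescribed by any insurer or broker.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class LossScenario:
    """A plausible AI-related loss scenario (all values are hypothetical)."""
    name: str
    reference_loss: float  # estimated loss for a reference company, in dollars
    scale_factor: float    # how this organization's exposure compares to the reference

    @property
    def scaled_loss(self) -> float:
        # Scale the reference loss to this organization's operations.
        return self.reference_loss * self.scale_factor


def modeled_limit(scenarios: list[LossScenario], retention: float) -> float:
    """Limit needed to cover the worst scaled scenario above the self-insured retention."""
    worst = max(s.scaled_loss for s in scenarios)
    return max(0.0, worst - retention)


def peer_benchmark(peer_limits: list[float]) -> float:
    """Median limit purchased by peers of similar size and risk profile."""
    return median(peer_limits)


if __name__ == "__main__":
    scenarios = [
        LossScenario("Breach via compromised AI model", 4_000_000, 1.5),
        LossScenario("Business interruption from failed AI system", 2_000_000, 2.0),
    ]
    limit = modeled_limit(scenarios, retention=1_000_000)
    benchmark = peer_benchmark([3_000_000, 5_000_000, 10_000_000])
    print(f"Modeled limit:  ${limit:,.0f}")
    print(f"Peer benchmark: ${benchmark:,.0f}")
```

Comparing the modeled limit with the peer median gives a quick sanity check: a large gap in either direction suggests revisiting the scenarios, the scaling assumptions, or the chosen retention before deciding how much insurance to purchase.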

A Forward-Looking Approach to AI Risk

Ultimately, navigating the new realities of the cyber insurance market requires organizations to fundamentally integrate AI governance into their core risk management frameworks. Businesses that successfully secure comprehensive coverage are those that move beyond a reactive posture and instead proactively model their unique AI-related exposures. They benchmark their strategies not just against industry peers but against a forward-looking view of the evolving legal and technological landscape. By clearly defining their risk tolerance and using detailed limit modeling to align their insurance purchases with specific financial vulnerabilities, these companies can provide underwriters with the transparent and robust accounting they demand. This strategic alignment demonstrates a mature understanding of the challenges and establishes the foundation for a resilient and insurable operational model in an era increasingly defined by artificial intelligence.